Facebook's Fake News Problem Explained

by Timothy B. Lee (tim@vox.com), Nov 16, 2016


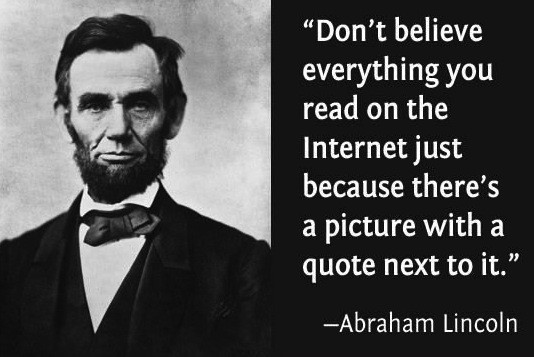

News stories are supposed to help ordinary voters understand the world around them. But in the 2016 election, news stories online too often had the opposite effect. Stories rocketed around the internet that were misleading, sloppily reported, or in some cases totally made up.

Over the course of 2016, Facebook users learned

- that the pope endorsed Donald Trump (he didn’t),

- that a Democratic operative was murdered after agreeing to testify against Hillary Clinton (it never happened),

- that Bill Clinton raped a 13-year-old girl (a total fabrication),

- and many other totally bogus “news” stories.

Stories like this thrive on Facebook because Facebook’s algorithm prioritizes “engagement” — and a reliable way to get readers to engage is by making up outrageous nonsense about politicians they don’t like.

A big problem here is that the internet has broken down the traditional distinction between professional news-gathering and amateur rumor-mongering. On the internet, the “Denver Guardian” — a fake news site designed to look like a real Colorado newspaper — can reach a wide audience as easily as real news organizations like the Denver Post, the New York Times, and Fox News.

Since last week’s election, there has been a fierce debate about whether the flood of fake news — much of it prejudicial to Hillary Clinton — could have swung the election to Donald Trump.

Internet giants are coming under increasing pressure to do something about the problem.

On Monday, Google announced that it was going to cut fake news sites off from access to its vast advertising network, depriving them of a key revenue source. Facebook quickly followed suit with its own ad network.

At the same time, Facebook CEO Mark Zuckerberg has signaled reluctance to have Facebook become more active in weeding out fake news stories. He described it as “a pretty crazy idea” to think fake news on Facebook could have swayed the election. He says Facebook will look for new ways to stop the spread of fake news, but he also argues that “we must proceed very carefully” and that Facebook must be “extremely cautious about becoming arbiters of truth ourselves.”

The importance of this issue is only going to grow over time. More and more people are getting their news from the internet, putting more and more power in the hands of companies like Google, Twitter, and especially Facebook. The leaders of those companies are going to be under increasing pressure to use that power wisely.

Video (Vox): Fake news wasn’t the biggest media problem of 2016

Fake news is a problem, but we don’t know how big it is

The problem of fake news is so new that we don’t have definitive data on how big of a problem it is. But there are some reasons to think it could be very significant.

We know that low-quality news stories have proliferated on Facebook. For example, investigations by BuzzFeed and the Guardian found that a group of cynical Macedonian hucksters had created dozens of right-wing news sites that publish low-quality pro-Trump news stories. Some are plagiarized from other conservative news sites. Others appear to be totally made up, with headlines like “Proof surfaces that Obama was born in Kenya,” “Bill Clinton’s sex tape just leaked,” and “Pope Francis forbids Catholics from voting for Hillary!”

“Yes, the info in the blogs is bad, false, and misleading but the rationale is that ‘if it gets the people to click on it and engage, then use it,’” a Macedonian student told BuzzFeed.

Other fake news is generated by partisan bloggers taking news tidbits out of context and drawing totally wrong conclusions from them. For example, some confused conservative bloggers misread a leaked email from Clinton adviser John Podesta as evidence that Democrats were manipulating public poll results.

In fact, Democrats were using a standard polling technique called oversampling on Democrats’ own internal polls — but that didn’t stop the story from spreading among online conservatives.

And fake news hasn’t only circulated on the right-hand side of the political spectrum. A story about Pope Francis endorsing Bernie Sanders was also made up.

As the internet’s most popular news source, Facebook appears to have the biggest fake news problem. But it’s not a problem that only afflicts Facebook. In the wake of last week’s election, one of the top search results on Google was a post claiming that Trump won the popular vote — he didn’t.

Facebook is worried about being seen as biased against conservatives

Publicly, Facebook’s CEO has downplayed the site’s role in distributing fake news online. But privately, there’s a raging debate inside Facebook about how it could do more.

BuzzFeed reports that some Facebook employees are frustrated by Zuckerberg’s blasé response to concerns about fake news on the social media platform.

“What’s crazy is for him to come out and dismiss it like that,” one anonymous engineer wrote, according to BuzzFeed. “He knows, and those of us at the company know, that fake news ran wild on our platform during the entire campaign season.”

One reason Facebook’s management has been so cautious on this issue is that it’s still smarting from the controversy earlier this year over Facebook’s trending news feature. Until this summer, Facebook employed a team of professional journalists to curate the trending news box that appeared in the right-hand rail next to the Facebook newsfeed.

Then in May, one of Facebook’s trending news editors told Gizmodo that the team was routinely suppressing trending stories that slanted in a conservative direction. That caused a massive backlash, including questions from Republicans in Congress about Facebook’s editorial policies. This led to Facebook terminating the entire trending news team. Today, Facebook uses software to choose which headlines appear in this box.

But with the human editors gone, Facebook had a new problem: It started to see fake stories showing up in the trending box. Facebook’s trending news algorithm simply wasn’t sophisticated enough to distinguish an accurate news story from an inaccurate one.

The current debate over fake news on Facebook can be seen as a much broader version of the same controversy. Nobody is going to defend fake news per se. But once you start injecting human editorial judgment into content decisions, questions of bias are inevitably going to come up.

This is a particularly tricky issue because it’s not easy to draw a line between articles that are totally fake and articles that are just highly misleading or based on shoddy reporting.

For example, after BuzzFeed reported that 43 percent of articles from a hyperpartisan site called Right Wing News were either “mostly false” or a “mix of true and false,” the site’s editor insisted that many of the articles classified as false were actually accurate. One story claimed that the Clinton Foundation devoted only 10 percent of its revenue to charity. It took that figure from a Federalist article that, in calculating total charitable spending, counted only grants to third-party organizations and left out charitable activities carried out by the Clinton Foundation itself.

Banning fake news might be the wrong approach

Most of the discussion about how Facebook could address fake news has assumed that the goal is to banish fake news from the platforms. But there are a couple of other approaches that might ultimately work better.

One is that rather than banning fake news, Facebook could give a boost to high-quality news. It’s hard to say whether Right Wing News is a fake news site, but it’s easy to say that the New York Times and the Washington Times are legitimate news sources. And Facebook’s newsfeed algorithm decides which news stories to show users first. Giving high-quality news sources a bonus in the newsfeed algorithm could improve the average quality of news users read without Facebook having to make tricky judgments about which news is and isn’t fake.

A second approach would be to change how Facebook presents dubious news stories instead of banning them outright. Right now, when users post a link, Facebook expands that into a “card” showing an image, headline, and short sentence describing the article. This format is standardized so that a New York Times article is formatted in the same way as an article from a no-name blog.

But Facebook could change that. Instead of presenting an identical summary card for every link, it could present different kinds of cards — or no card at all — depending on the perceived quality of the source. Credible news sources could show full cards like they do now. Less credible sources could show smaller cards — or no cards at all.

And Facebook could hire a team of fact-checkers to examine the most widely shared stories. If a story checks out, Facebook could show an icon verifying that the story is authentic. If it doesn’t check out, Facebook could include a prominent link to an article explaining why the story is inaccurate. Users would still be free to read the story and disagree with Facebook’s verdict. But they’d at least be aware that a particular article’s claim is disputed.

Ultimately, some level of controversy is inevitable for a topic this political. Any effort to crack down on fake news is going to generate a certain amount of backlash from people whose stories are labeled as bogus. But critics say Zuckerberg has an obligation to try to do something to stem the flood of fake news on his platform.


5 Fast Facts You Need To Know

1. Facebook is One of America’s Top News Sources

2. Most, But Not All, Fake Stories This Year Were Pro-Trump

3. Many Fake News Stories Originate With Moneymaking Sites Overseas

4. Fake News Stories Also Cover Trump-Related Themes

5. Facebook and Google Are Now Taking Steps to Curb Fake News

This fabricated “news” story claiming that movie star Denzel Washington had endorsed Trump was shared more than 22,000 times on Facebook in two days. (Facebook/American News)

http://heavy.com/news/2016/11/fake-news-facebook-google-donald-trump-bernie-sanders-wins-election/

Trump understands what many miss: People don’t make decisions based on facts

How can we make facts matter?

Research in psychology and political science offers a little hope.

by Julia Belluz and Brian Resnick Nov 16, 2016
